So I figured I’d start posting a little bit about what I’m up to Arduino/Blender-wise, mostly to try to get back in the habit.

CLOUDY VISION

Been working on a loose idea about some kind of robotish platformish kind of thing. I keep calling it robot rugby but I’m not really sure what that means at this point. I envision some kind of arena with about 4 simple bots trying to move a puck or ball around, mostly by pushing it. Control will be closed-loop, with feedback from an overhead camera that monitors each bot’s position and does some basic path planning.
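To make the closed-loop idea a bit more concrete, here’s a minimal sketch of one control step. It is not code from the project. It assumes the camera already gives a bot position and heading, and the names (`steer_toward`, `arrive_radius`, `gain`) are made up for illustration. It turns the bot toward a target point with a simple proportional rule:

```python
import math

def steer_toward(bot_xy, bot_heading, target_xy, arrive_radius=10.0, gain=1.0):
    """Return (drive, steer) for one control step.

    drive is 0.0 (stop) or 1.0 (go); steer is in [-1, 1], where positive
    means "turn counterclockwise". Assumes math-style axes (y pointing up);
    in image coordinates (y pointing down) the steer sign flips.
    """
    dx = target_xy[0] - bot_xy[0]
    dy = target_xy[1] - bot_xy[1]
    if math.hypot(dx, dy) <= arrive_radius:
        return 0.0, 0.0  # close enough, stop

    bearing = math.atan2(dy, dx)  # direction from bot to target
    # Wrap the heading error into [-pi, pi] so the bot takes the short way round.
    error = math.atan2(math.sin(bearing - bot_heading),
                       math.cos(bearing - bot_heading))
    steer = max(-1.0, min(1.0, gain * error / math.pi))
    return 1.0, steer
```

Run once per camera frame, something like this would keep nudging the bot toward wherever the path planner says to go next.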

SIMPLE BOTS

The bots are super-simple, not even bots really, just remote control things because they really don’t have any sensors of their own. I want to utilize cheap, common parts and this is what I have so far for the ‘v1’ bot. The basic capabilities of these bots are:

-motors for mobility

-LEDs to visually ID each bot via color patterns

-control receiver

-battery powered and able to ‘self charge’ when low

The parts list so far is pretty simple

(1) 9g Servo – continuous rotation

(1) 2.5g Servo – standard 180deg

(1) Arduino Nano

(1) USB Battery Pack

(3) RGB LEDs

(1) IR Receiver

To get started I went with a differential drive but I’ve changed my mind on that. Here’s the differential drive version:

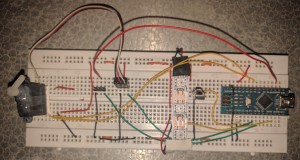

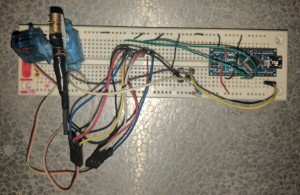

This is basically another iteration of ugbot and tweedle, but actually way simpler. I’ll probably end up calling them Tweedles though, or 790’s, and if you get those references we should hang out. And here’s the breadboard testing rig I made early on to just get all the wiring and code set up before I soldered anything.

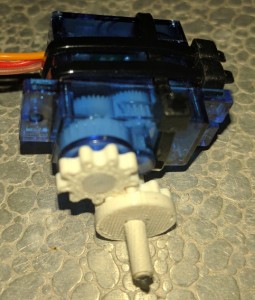

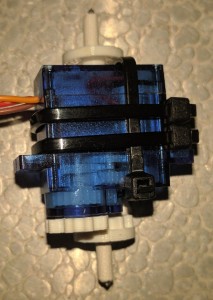

I decided to go with a ‘car drive’ for several reasons. It takes the weight off the servo drive, and I think it’s more efficient to have one drive motor instead of two. It also means I only need one 360deg servo. Those are slightly more expensive, and the alternative is modding a regular servo, which is a pain. I’m still honing the design but I’ve got a basic transmission and steering system made from little 3D-printed parts. Here’s the first test print of the transmission. It’s super-simple, gear ratio is 1:1 and it’s strapped on with zip-ties. I was going to glue it but the zip-ties magically worked, so I think I’m going to plan on using those in the next iteration of the print.

CONTROL

I decided to use IR control because it’s by far the cheapest as far as parts go. We’re talking less than a dollar, compared to BT, RF or WiFi, which can run several dollars and add a lot of unnecessary complexity. Though I probably will play with those ESP8266 WiFi modules at some point. I’ve already ordered a couple to mess with since they’re only a couple of bucks.

I don’t really need manual control for the ultimate goal of this, since this is really all an excuse for me to play with computer vision and visual servoing concepts and code, but it makes sense to create a manual controller just for testing.

I wasted a lot of time screwing around with a ‘funduino’ board, but it ate up too many pins and one of the buttons never worked so I got frustrated with it. At some point I realized I had a wii nunchuck and a wiichuck adapter already, so I put them to use. The wiichuck is I2C so it only uses 2 data and 2 power pins, way better than the 8 or so pins the funduino required. I wish I’d thought of that before I wasted like $20 on the funduino and an arduino uno to use with it. Here’s the wiichuck IR control rig:

IR CODE

I had no idea what I was getting into. I’d played with RF and BT modules before, I thought IR control would be simple, and it kind of is, but it’s also kind of a nightmare.

A little backstory on the IR thing- I’ve also been kind of wanting to control flying things via computer vision for a long time- specifically these little cheap IR controlled helicopters. Quads are fun too, but the tiny ones are really hard to control, have terrible battery life, and are more expensive than I’d like. The little IR helicopters are surprisingly easy to fly, cheap, and have decent battery life for a flying thing. Going with IR for the bots was a dual purpose decision, it was cheap for the bots, but it also could lead into controlling the helicopters too.

I started to look into LIRC, but honestly I just got confused. I don’t think it was meant for what I’m trying to do. I slapped an IR LED onto a Raspi and messed around with it awhile, got it to send a few codes, but I just didn’t like it, so I went back to the Arduino to actually fire the IR LED. I figured I’d set it up so the PC sends serial commands to the Arduino and it fires those off in IR language. I didn’t realize IR language was such a pain in the neck to learn and speak.

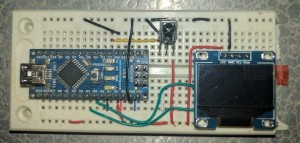

I won’t go through the whole process, a lot of it involves me being an idiot, making stupid assumptions and spending long hours learning how stupid those assumptions were and why they were stupid. This is one of the tools I made to help me stop being stupid. It’s just an IR receiver and an OLED screen I thought I could use to analyze IR codes. It really hasn’t helped that much, but I do like these little OLED screens a lot now. Here’s that thing:

Controlling the bots turned out to be fairly straightforward. I just used the NEC protocol to send 6-byte chunks that encode the bot’s motor states and ‘ID’ (because I expect to be controlling multiple bots with one IR LED), plus another byte in case I need it, maybe for RGB LED states or something. It actually wasn’t as straightforward as all that, because NEC has these repeat codes that are just weird. I didn’t know they were happening at first and thought the receiver was junk or something.
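For illustration, here’s a rough sketch of how a bot command could be packed into a single value and how a receiver might skip repeat codes. This is not the project’s actual format. The field layout is hypothetical, and since a standard NEC frame carries 32 bits, the sketch uses 4 bytes (bot ID, drive, steer, spare) rather than the 6 mentioned above. The `REPEAT_MARKER` value is also an assumption; as far as I know IRLib2 reports NEC repeats as all ones, but check what your library does.

```python
# Assumption: the receiving library flags an NEC repeat frame with this value.
REPEAT_MARKER = 0xFFFFFFFF

def pack_command(bot_id, drive, steer, spare=0):
    """Pack four byte-sized fields into one 32-bit value (hypothetical layout)."""
    if not 0 <= bot_id < 255:
        # ID 255 is kept free so a real command can never equal REPEAT_MARKER.
        raise ValueError("bot_id must be in 0..254")
    for name, value in (("drive", drive), ("steer", steer), ("spare", spare)):
        if not 0 <= value <= 255:
            raise ValueError(name + " must be in 0..255")
    return (bot_id << 24) | (drive << 16) | (steer << 8) | spare

def unpack_command(value):
    """Split a 32-bit value back into (bot_id, drive, steer, spare)."""
    return ((value >> 24) & 0xFF, (value >> 16) & 0xFF,
            (value >> 8) & 0xFF, value & 0xFF)

def handle_frame(value, my_id):
    """Return (drive, steer, spare) if the frame is a fresh command for this bot.

    Repeat frames and frames addressed to other bots return None.
    """
    if value == REPEAT_MARKER:
        return None
    bot_id, drive, steer, spare = unpack_command(value)
    if bot_id != my_id:
        return None
    return drive, steer, spare
```

Putting the ID in the frame is what lets one IR LED drive several bots: every bot hears every frame and simply ignores the ones that aren’t addressed to it.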

I thought controlling the helicopters would be a matter of pointing the remote at my receiver, reading the codes and figuring out the protocol. That didn’t work. The Arduino IR library I was using (IRLib2) had no idea what those codes were. Long story short, I finally broke out my little oscilloscope and made a cheap-o IR probe from an IR LED and a broken headset jack. I’m still figuring out the exact protocol these things use, but at least now I can see the actual pulses and decode them myself. So far I can get the helicopter to turn its light on and off, but even that doesn’t always work. Here’s my trusty Gabotronics Xprotolab Portable with the probe and the helicopter controller. It’s pretty much the best Kickstarter thing I’ve backed. This thing is great, and it was like $80 through the campaign.
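Decoding pulses by hand mostly comes down to measuring the gaps. Here’s a minimal sketch, assuming a pulse-distance scheme like the one NEC uses, where every bit starts with a short mark and the length of the space after it tells you 0 or 1 (roughly 560 µs versus 1690 µs for NEC). The helicopter protocol is still unknown and may use completely different timings or a different encoding, so the defaults and function names here are placeholders:

```python
def decode_pulse_distance(durations_us, short_space=560, long_space=1690,
                          tolerance=0.3):
    """Turn alternating mark/space durations into a list of bits.

    durations_us starts at the first data mark (header already stripped):
    mark, space, mark, space, ..., final mark. Only the spaces carry data.
    """
    bits = []
    for i in range(1, len(durations_us), 2):
        space = durations_us[i]
        if abs(space - short_space) <= tolerance * short_space:
            bits.append(0)
        elif abs(space - long_space) <= tolerance * long_space:
            bits.append(1)
        else:
            raise ValueError("space of %d us matches neither bit length" % space)
    return bits

def bits_to_int(bits, lsb_first=True):
    """Combine bits into an integer. NEC sends the least significant bit first."""
    ordered = reversed(bits) if lsb_first else bits
    value = 0
    for bit in ordered:
        value = (value << 1) | bit
    return value
```

If a capture keeps raising `ValueError`, that’s a decent hint the remote isn’t using pulse-distance encoding at these timings.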

WHY NO CODE YET?

Because at the moment it’s not worth posting and I am surprisingly lazy. I’ll post print files and code when there’s anything really worth posting, but if by some bizarre chance you’re working on something similar and give no flips about the state of the code I’d be happy to share.

LATER

I have yet to actually control a robot via computer vision, but that’s part of the long term goal of this. I have played with a little of the code required just to see what I’m getting into. I downloaded ViSP (Visual Servoing Platform) and went through a few tutorials. I realized pretty quick it wasn’t what I needed, or it was a lot more than I needed. It also meant I’d be coding in C++, and I was okay with that but it wasn’t my preference. I realized most of the functions I needed were actually OpenCV, so I just went to that, and I can use Python, which makes my life much easier.

Since I know I’ve got a lot of work to do on the bots I thought I’d make an even simpler platform to test object tracking. So I put together this little rig that has 2 servos with a laser diode slapped on it so I can just point it and a camera at a wall and start tracking the movements of the laser and control it with visual feedback. Here’s that little rig:
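As for the tracking loop itself, here’s a bare-bones sketch of the idea, not the project’s code. It treats a frame as a plain list of rows of grayscale values so it runs without any libraries; with OpenCV, `cv2.minMaxLoc` on a grayscale image does the same brightest-pixel search. The function names, the gain, and the 0 to 180 degree servo range are assumptions, and the sign of the correction depends on how the servos and camera are mounted.

```python
def find_brightest(frame):
    """Return (x, y) of the brightest pixel in a grayscale frame (list of rows)."""
    best_xy, best_value = (0, 0), -1
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value > best_value:
                best_xy, best_value = (x, y), value
    return best_xy

def servo_correction(spot_xy, target_xy, pan_deg, tilt_deg, gain=0.05):
    """One proportional step: nudge pan/tilt so the laser spot moves to the target.

    gain converts pixel error into degrees; too high and the spot oscillates.
    """
    err_x = target_xy[0] - spot_xy[0]
    err_y = target_xy[1] - spot_xy[1]
    pan = max(0.0, min(180.0, pan_deg + gain * err_x))
    tilt = max(0.0, min(180.0, tilt_deg + gain * err_y))
    return pan, tilt
```

Each frame, find the spot, compute the new angles, send them to the servos, and repeat. That’s the whole visual feedback loop in miniature.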

This is really nothing so far, I’ve just been randomly poking at this idea for a few months and decided I should post something to try to get back in the habit of posting stuff I work on. I would be nowhere with my arduino stuff if it weren’t for other people’s little blogs and sites so I figure I’m obligated to offer the same to some other random shmuck trying to fudge their way through an idea that’s really way over their head but they dive in anyway. Here’s to you, shmuck.

WAIT, WHAT?

Yeah- I know. I’m not really sure either.

I’ll post more on this project as it evolves, but it is just kind of a cloudy vision of an idea so don’t hold your breath for anything spectacular. I think the old progranimals and lightning stuff was actually cooler and I should probably be developing that stuff more, but this is what I feel like doing now so I am.