Still not sure where I’m headed with this puppet\animatronics stuff but I’ll keep doing it as long as it’s fun and\or I can’t stop. I’m really hoping to find some help with the crafting side of this because I kind of hate all the gluing. But this happened, so here it is.

Haven’t posted in over a year so figured I should write something.

I didn’t move funkboxing to self-hosted. My home internet has been garbage since I got my house so I don’t think that’ll work but at some point I’ll probably migrate to wordpress hosting because godaddy is overkill at this point.

I got a bug to be a little more social so I’ve been playing D&D with a group on Mondays since about March. I definitely should have started doing D&D sooner. I semi-remember playing with my father and brothers when I was very young but I don’t think D&D makes a lot of sense for children. I also joined an improv group that’s been a lot of fun. This past weekend I pitched an improv show called ‘NerdProv’ focused on ‘nerd culture’ and they liked it, so we’re going to be working on producing that. I’ve also been volunteering with BREC and CAA. Mostly that involves standing around and chatting with people, but they say they appreciate me showing up so I keep doing it and having fun. I especially love doing BREC events at the zoo; peacocks are hilarious.

Project-wise I’ve been kind of lazy since I became a homeowner. Or rather my home is my project and I don’t really feel like posting about home-improvement stuff. I have gotten back into some LED display ideas for D&D miniatures but some of that is just an excuse to put my box of LED stuff to use. I got a welder and safety gear but I still haven’t even tested it because I’m afraid of blowing my entire breaker box. I’ll talk myself into giving it a go at some point.

Somewhere between the D&D stuff and the Improv stuff I got interested in puppets, then got discouraged by how hard it is to sync the mouths, then decided to jam a servo in it so I didn’t have to. This is v1 of a little servo-puppet system I’m working on. I’ll try to be a little better about posting stuff like this to funkboxing.

One of the few people that might have checked this site passed Saturday night. Mark Jeff Murray. He was my neighbor for almost 10 years at Jefferson Oaks and we kept in touch when I moved. We used to talk all the time, he’d be out on his porch reading on weekends and we’d chat about sci-fi and the future of humanity and politics and physics and everything in between. I’d just had coffee with him 3 weeks ago, the day after his last day of work ever. He’d retired from LSU and was looking forward to having lots and lots of time off to do whatever he wanted. Jeff was a very kind and intelligent man and was one of the few people I’ve ever met that could keep up with my imagination without breaking a sweat. I’m proud and privileged to have called Jeff a friend and I’ll miss him a lot. He deserved more time.

I’ve been planning to migrate funkboxing to self-hosted. I’m not sure about the timeframe but it’s coming up on my project list so it’ll be sooner than later.

With luck nobody will even notice, but more likely the site will go down for a while as I figure out what I’ll inevitably do wrong with the DNS or whatever.

Just a heads up in case any of the 6 people that visit this site once a year come by.

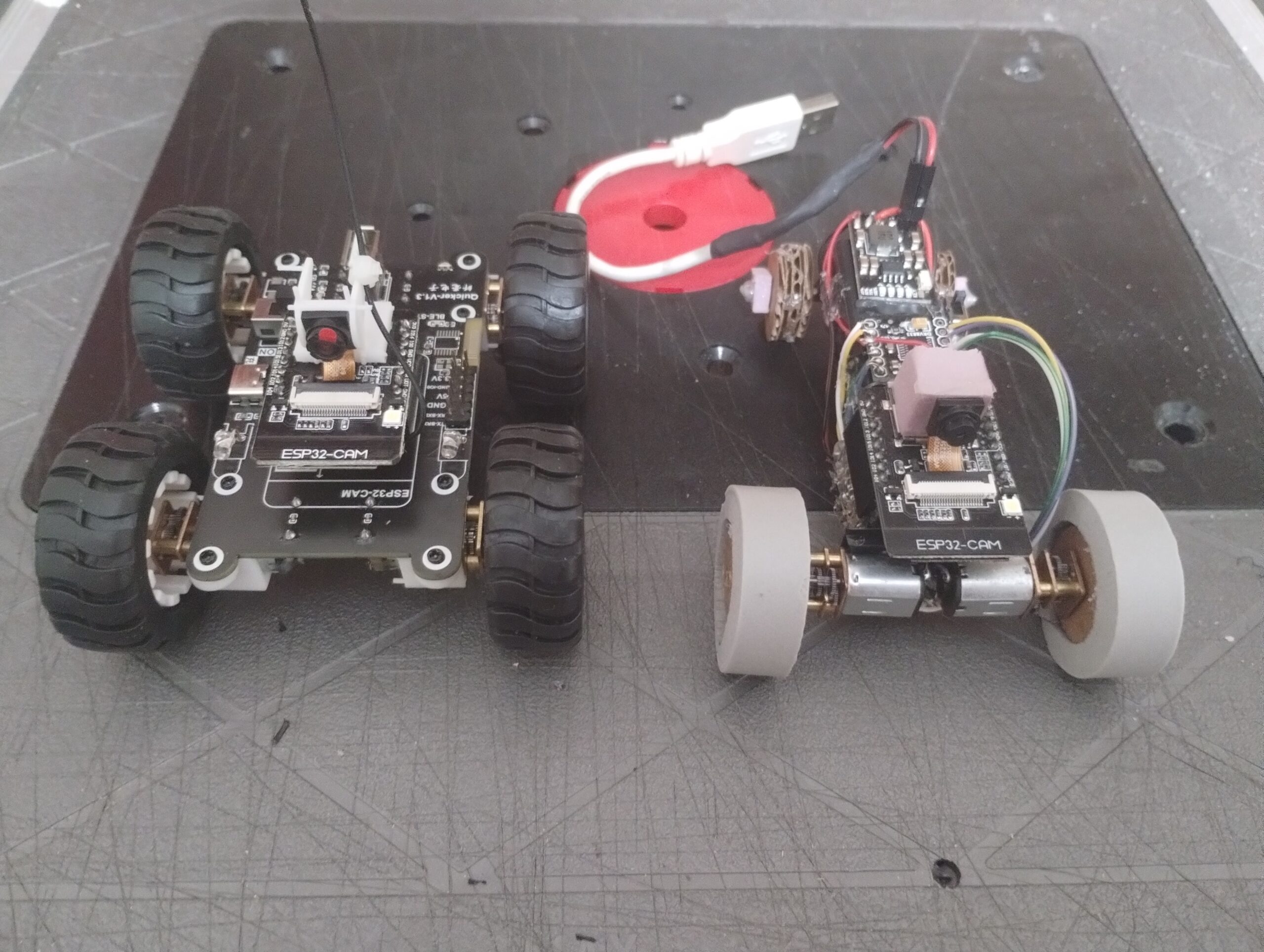

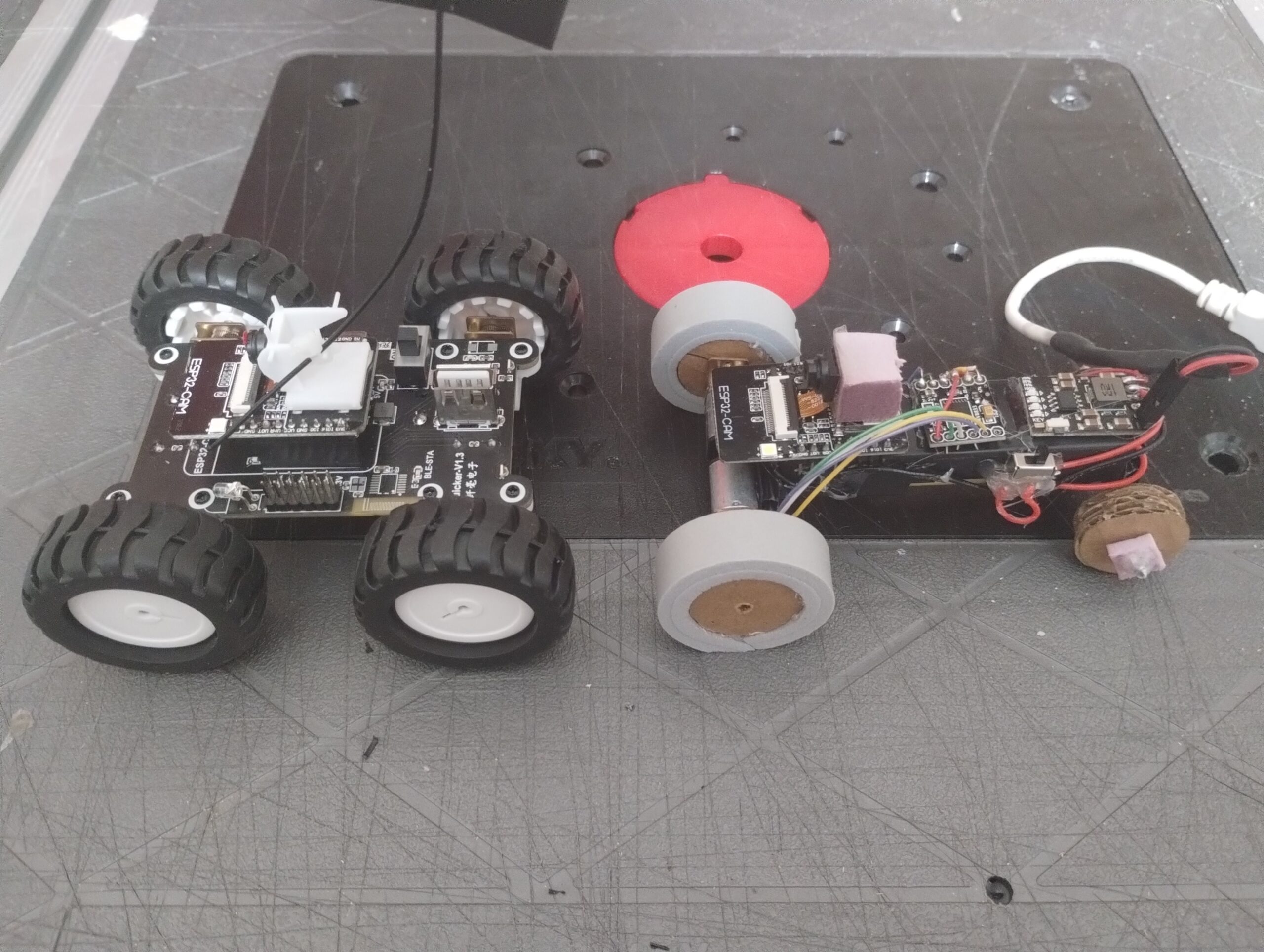

I never improved my ‘ESPCreep’ (https://github.com/funkboxing/espcreep) Servo Drive robot car, mostly because the 3D printing issues were tiresome, but I still wanted a simple drive platform and kept looking around. I found this pretty nice little ‘Quicker’ package on Aliexpress (on the left): https://www.aliexpress.us/item/3256803512050979.html

After looking at it online for about a year I finally talked myself into ordering one. A month later it arrived. I didn’t trust whatever they flashed at the factory and had trouble getting the source code because it was on Baidu and in Chinese. From the one pdf I could access and some googling I found the code must have been derived from this: https://www.pilothobbies.com/product/scout32/. So with that in hand I was able to flash a working control webserver. I was pretty happy with the purchase but after driving it around for about 5 minutes I started thinking about making my own.

In the past I’d chosen 360deg servo drive because on paper it’s a great solution. One PWM line per motor controls both speed and direction. Problem is you’re limited to a servo’s power and package, which aren’t ideal. Also you have to find the ‘center\stop’ position on each servo and it’s never exactly zero. The alternative is DC motors, but those need a motor controller and that increases the part count.
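To show what I mean about the ‘center\stop’ problem, here’s a rough sketch of how a speed value turns into a servo pulse. This is not the ESPCreep code. The function name `servo_pulse_us`, the 1500us nominal stop and the 500us span are all assumptions based on typical hobby servo numbers, so check your own servos.

```python
def servo_pulse_us(speed, center_us=1500, span_us=500):
    """Map a signed speed in [-1.0, 1.0] to a servo pulse width in microseconds.

    center_us is the pulse where this particular servo actually stops.
    1500 is only the nominal value (an assumption); each servo needs its own trim.
    span_us is the assumed distance from stop to full speed.
    """
    speed = max(-1.0, min(1.0, speed))  # clamp out-of-range input
    return int(center_us + speed * span_us)


# Hypothetical trim values: two "identical" servos that stop at different pulses.
LEFT_CENTER_US = 1488
RIGHT_CENTER_US = 1507

left_stop = servo_pulse_us(0.0, center_us=LEFT_CENTER_US)
right_stop = servo_pulse_us(0.0, center_us=RIGHT_CENTER_US)
```

The annoying part is that the center values have to be found by hand for every servo, and if you skip it the car creeps when it’s supposed to be stopped.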

But seeing the ‘Quicker’ car and code I saw it’s pretty easy to work with DRV8833 H-bridges. It’s only 2 PWM lines per motor and you get all the power and options of using DC motors. Also I happened to have a bunch of HW-627 DRV8833 modules I got for steppers so it wasn’t hard to start breadboarding it all up. I also had some boost-charge modules and some 18650 batteries and holders.
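Here’s a minimal sketch of the 2-PWM-per-motor idea, just the math and not the Scout32 code. The usual simple scheme on a DRV8833 is to PWM one input and hold the other low to go one way, swap them to go the other way, and hold both low to coast. The names `drv8833_duties` and `tank_mix` and the 8-bit duty range are my assumptions for illustration.

```python
def clamp(value, low=-1.0, high=1.0):
    """Keep value inside [low, high]."""
    return max(low, min(high, value))


def drv8833_duties(speed, max_duty=255):
    """Map a signed speed in [-1.0, 1.0] to (in1_duty, in2_duty) for one H-bridge.

    Forward: PWM on IN1, IN2 held low.
    Reverse: IN1 held low, PWM on IN2.
    Zero:    both low, so the motor coasts.
    max_duty=255 assumes 8-bit PWM resolution.
    """
    speed = clamp(speed)
    duty = int(abs(speed) * max_duty)
    if speed > 0:
        return (duty, 0)
    if speed < 0:
        return (0, duty)
    return (0, 0)


def tank_mix(throttle, steering):
    """Mix throttle and steering (each in [-1.0, 1.0]) into left/right motor speeds."""
    return clamp(throttle + steering), clamp(throttle - steering)


# Example: half throttle with a slight right turn.
left, right = tank_mix(0.5, 0.25)
left_pins = drv8833_duties(left)
right_pins = drv8833_duties(right)
```

On the actual board each duty value would get written to a PWM-capable pin wired to the matching DRV8833 input. Compared to the servo approach there’s no center to hunt for, since zero duty on both inputs really is stopped.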

So after breadboarding success I soldered\glued the monstrosity on the right together. Though the wheels are literally made of garbage it moves around okay. After that I remembered Fritzing and OSH Park exist so I slapped together a PCB and sent it off to have 3 made for $20, which includes expedited production.

Let’s just take a second to think about how awesome it is that things exist that let me write that last sentence, and that it only cost $20.

So while I’m waiting for that to come in I’ve got some other notions about a ‘Modular Robotic Funhouse’ that I need to play with. I’ll post more on that later, along with the code. Right now it’s still just the Scout32 code with ArduinoOTA added for convenience.

Here are the parts:

ESP32-Cam

HW-627 (DRV8833 Dual H-Bridge)

HW-775 (Boost-Charge)

18650 Battery holder and battery

(2) N20 Motors